Table of contents

Hey 👋, welcome to my little world! Let's bypass Cloudflare easily with Python!

While scraping websites you may come across some sites that are using Cloudflare protections that make them much more difficult to scrape like Opensea and you can't directly scrape their content.

Today, we shall use the cloudscraper package that is available on PyPI and with this tool, we are able to bypass Cloudflare.

🔸 What is Cloudflare?

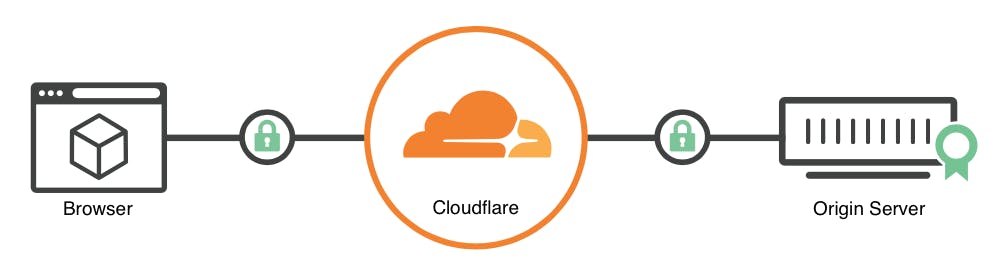

Cloudflare, Inc. is an American web infrastructure and website security company that provides content delivery network and DDoS mitigation services.

Its services occur between a website's visitor and the Cloudflare customer's hosting provider, acting as a reverse proxy for websites.

Its services occur between a website's visitor and the Cloudflare customer's hosting provider, acting as a reverse proxy for websites.

🔸 The Code

We shall demonstrate this on Opensea NFT Collection Stats page.

pip install cloudscraper

A simple Python module to bypass Cloudflare's anti-bot page (also known as "I'm Under Attack Mode", or IUAM), implemented with Requests.

I love this package because it is actively being updated and developed & it has over 1600 Stargazers!

Let's import them in our newly created cloudpass.py file

from bs4 import BeautifulSoup as beauty

import cloudscraper

Let's create a cloud scraper instance and define our target URL;

scraper = cloudscraper.create_scraper(delay=10, browser='chrome')

url = "https://opensea.io/rankings"

We initialised it with a browser argument and a delay time period, you can omit these.

Let's now scrape our target;

info = scraper.get(url).text

soup = beauty(info, "html.parser")

soup = soup.find_all('script')

The text method returns the text from the scraper response, we then create a soup using html.parser in order to be able to find the particular data where our data is residing.

And finally, we can loop through to get our scraped data which is a nested dictionary. I will be writing another blog on how you can later dump this into a CSV file.

for data in soup:

print(data.get_text())

GitHub Repo:

That's it!

🔸 Conclusion

Once again, hope you learned something today from my little closet.

Please consider subscribing or following me for related content, especially about Tech, Python & General Programming.

You can show extra love by buying me a coffee to support this free content and I am also open to partnerships, technical writing roles, collaborations and Python-related training or roles.

📢 You can also follow me on Twitter : ♥ ♥ Waiting for you! 🙂

📢 You can also follow me on Twitter : ♥ ♥ Waiting for you! 🙂